Apache Spark & Zeppelin

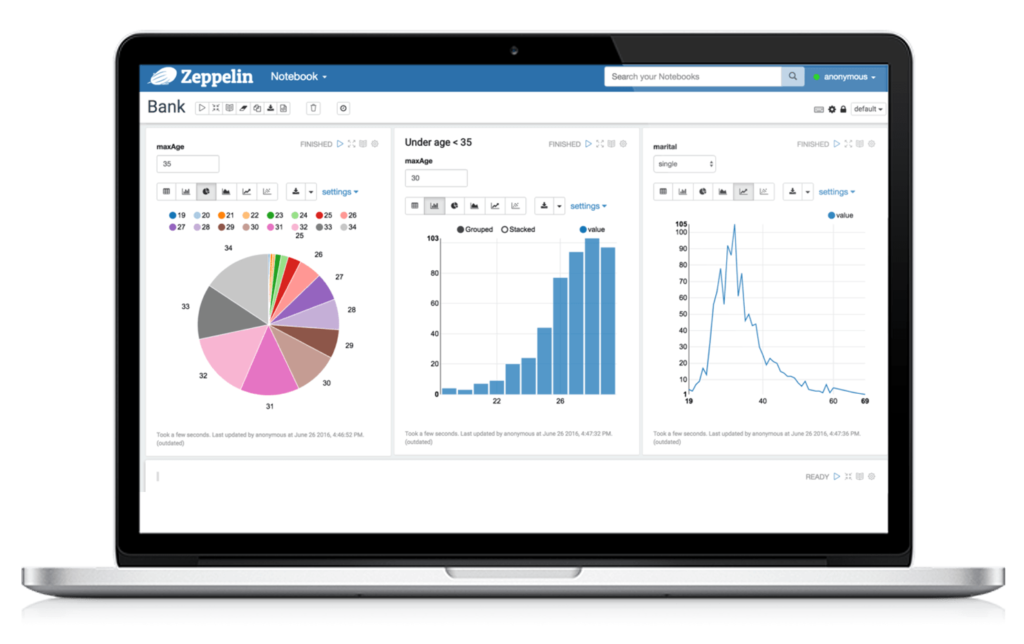

An open-source, web-based "notebook" that enables interactive data analytics and collaborative documents.

Apache Spark and Zeppelin

Apache Zeppelin is an open-source, web-based “notebook” that enables interactive data analytics and collaborative documents. The notebook is integrated with distributed, general-purpose data processing systems such as Apache Spark (large-scale data processing), Apache Flink (a stream processing framework), and many others. Apache Zeppelin allows you to make beautiful, data-driven, interactive documents with SQL, Scala, R, or Python right in your browser.

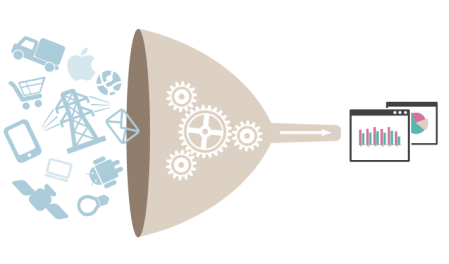

Data Ingestion

Data ingestion in Zeppelin can be done with Hive, HBase, and the other interpreters that Zeppelin provides.

Data Discovery

Zeppelin provides Postgres, HAWQ, Spark SQL, and other data discovery tools; with Spark SQL, the data can be explored using SQL queries.

Data Analytics

Spark, Flink, R, Python, and other useful tools are already available in Zeppelin, and the functionality can be extended by adding a new interpreter.

Data Visualization & Collaboration

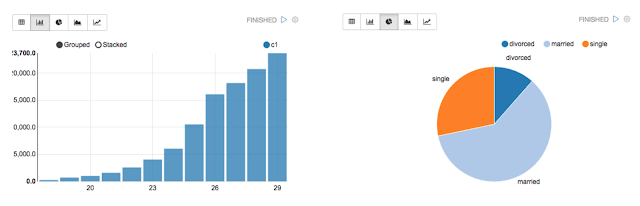

All the basic visualizations, such as bar, pie, area, line, and scatter charts, are available in Zeppelin.

Apache Spark

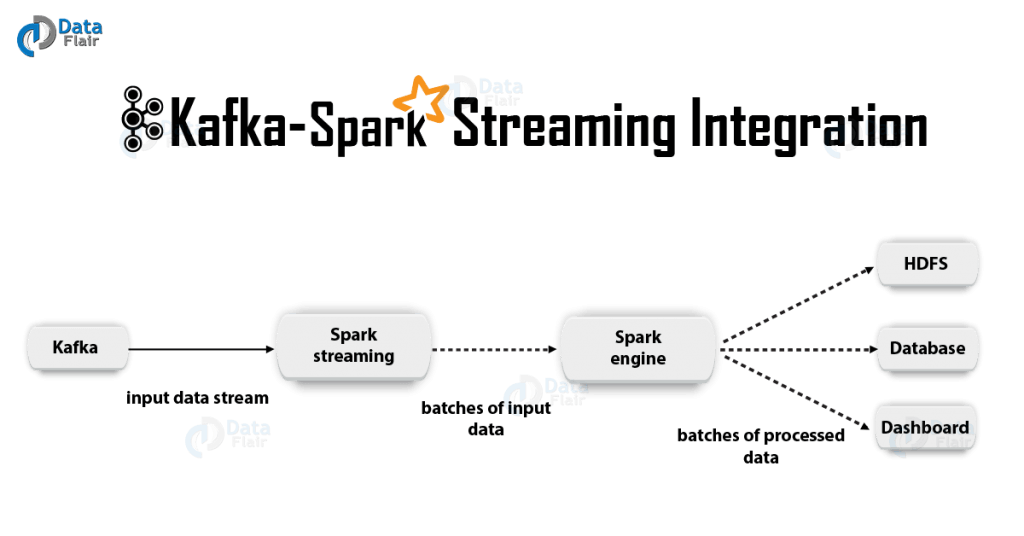

In FileGPS, we use the Spark Streaming component, integrated with Kafka, for data computation.

Apache Spark Streaming

- Spark Streaming is an extension of the core Spark API that enables scalable, high-throughput, fault-tolerant processing of live data streams. It can ingest data from sources such as Kafka, Flume, Kinesis, or TCP sockets, and process it with algorithms expressed through high-level functions such as map, reduce, join, and window. The processed data is then pushed out to file systems, databases, and live dashboards. Spark Streaming uses micro-batching for real-time streaming.

- Micro-batching is a technique that treats a stream as a sequence of small batches of data. Spark Streaming groups the live data into small batches and hands each batch to the batch engine for processing. This approach also provides fault tolerance.
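
The micro-batching idea described above can be illustrated with a small, Spark-free sketch in plain Python. It is only a simplified model under stated assumptions: the function names `micro_batches` and `process_batch`, the sample events, and the one-second interval are all hypothetical and are not part of Spark or FileGPS.

```python
from collections import defaultdict

def micro_batches(events, batch_interval):
    """Group (timestamp, value) events into consecutive fixed-width batches.

    Simplification: intervals that received no events are skipped here,
    whereas a real streaming engine would still schedule an empty batch.
    """
    batches = defaultdict(list)
    for timestamp, value in events:
        # Events whose timestamps fall in the same interval share a batch index.
        batch_index = int(timestamp // batch_interval)
        batches[batch_index].append(value)
    # Return the batches in time order.
    return [batches[i] for i in sorted(batches)]

def process_batch(batch):
    """Stand-in for the batch computation (hypothetical): sum the values."""
    return sum(batch)

# Hypothetical stream of (arrival time in seconds, value) pairs.
events = [(0.2, 1), (0.7, 2), (1.1, 3), (2.5, 4), (2.9, 5)]

batches = micro_batches(events, batch_interval=1.0)
totals = [process_batch(b) for b in batches]
print(batches)
print(totals)
```

Each small batch is processed as an ordinary batch job, which is why the same batch-oriented code can serve a live stream.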

Apache Spark & Zeppelin - FAQs

Let us now take a closer look at using Zeppelin with Spark through an example:

- Create a new note from the Zeppelin home page with “spark” as the default interpreter.

- Before you start with the example, you will need to download the sample CSV file.

- Transform the CSV into an RDD.
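
The last step might look roughly like the following sketch, written for a `%pyspark` paragraph (or `%spark.pyspark`, depending on the Zeppelin version), where Zeppelin supplies the SparkContext as `sc`. The helper names `parse_line` and `csv_to_rdd` and the file path are hypothetical, and the sketch assumes the sample CSV has a single header row.

```python
import csv

def parse_line(line):
    """Split one CSV line into a list of fields, honouring quoted values."""
    return next(csv.reader([line]))

def csv_to_rdd(sc, path):
    """Load a CSV file as an RDD of field lists, skipping the header row.

    `sc` is the SparkContext that Zeppelin provides in a pyspark paragraph.
    Assumption: the first line of the file is a header.
    """
    lines = sc.textFile(path)
    header = lines.first()
    return lines.filter(lambda line: line != header).map(parse_line)

# In Zeppelin (hypothetical path, replace with the downloaded sample file):
# rows = csv_to_rdd(sc, "/tmp/sample.csv")
# rows.take(5)
```

Parsing each line with the `csv` module rather than a plain `split(",")` keeps quoted fields that contain commas intact.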

You can restart the interpreter for the notebook from the interpreter bindings (the gear icon in the upper right-hand corner) by clicking the restart icon to the left of the interpreter in question (in this case, the spark interpreter).